OpenAI has presented DALL-E 2, a significantly improved version of its earlier model for generating images from natural language descriptions.

With DALL-E 2, OpenAI promises higher-resolution images and lower latency when using the service. Users can also edit existing images, for example by moving or replacing objects within them.

OpenAI presented the first version of DALL-E in January 2021. At that time, its ability to turn a textual description into a visual concept was still very limited.

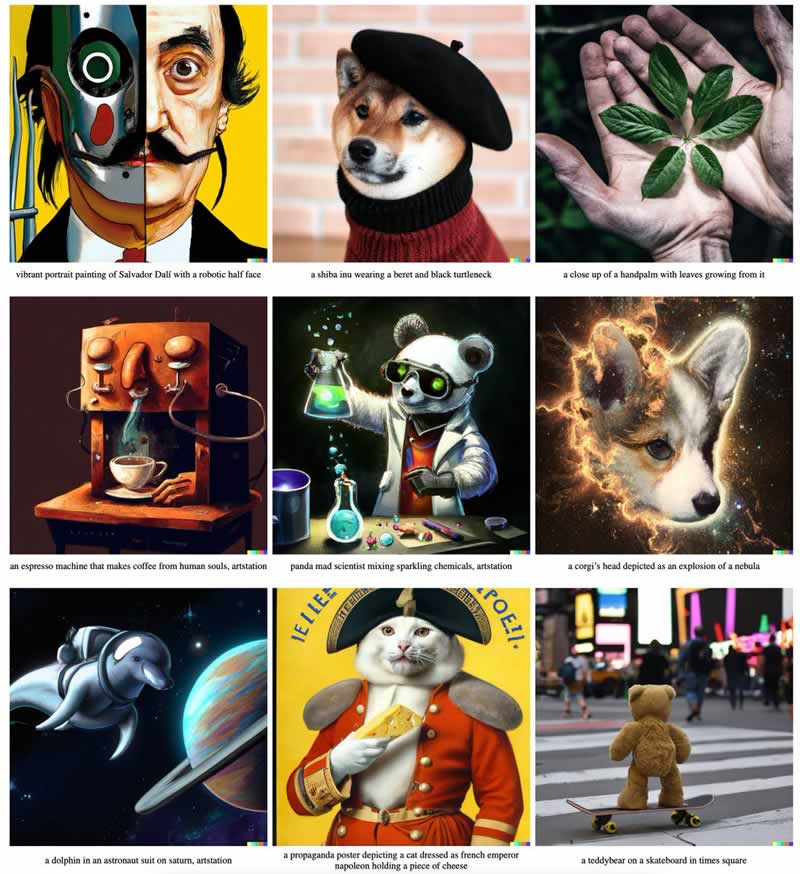

“DALL-E 2 can create original, realistic images and art from a text description. It can combine concepts, attributes, and styles,” OpenAI writes on its website.

The system can also edit photos based on a written request. It can add and remove elements while taking shadows, reflections and textures into account.

DALL-E 2 can also create “different variations” of an existing image, such as an iconic work of art, taking inspiration from the original.

The AI has learned “the relationship between images and the text used to describe them” through a process called “diffusion”, which starts from a pattern of random dots and gradually alters that pattern toward an image as it recognizes specific aspects of that image.
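To make the “random dots to image” idea more concrete, here is a toy sketch in Python. It is not DALL-E 2's actual implementation: it borrows the reverse-sampling step of the denoising diffusion probabilistic model (DDPM) formulation and applies it to a tiny list of four grayscale “pixels”. The function `oracle_denoiser` is hypothetical and cheats by returning the known target image; in a real system that role is played by a trained neural network conditioned on the text prompt. The linear noise schedule and the step count are also illustrative assumptions.

```python
import math
import random


def make_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule (an illustrative assumption; real systems tune this)."""
    denom = max(num_steps - 1, 1)
    betas = [beta_start + (beta_end - beta_start) * i / denom for i in range(num_steps)]
    alphas = [1.0 - b for b in betas]
    # alpha_bars[t] is the cumulative product of alphas up to step t.
    alpha_bars = []
    prod = 1.0
    for a in alphas:
        prod *= a
        alpha_bars.append(prod)
    return betas, alphas, alpha_bars


def oracle_denoiser(x_t, t, target):
    """Hypothetical stand-in for a trained, text-conditioned network.

    It simply 'knows' the clean image; a real model would have to
    estimate it from the noisy input x_t and the step t.
    """
    return list(target)


def sample(target, num_steps=50, seed=0):
    """Iteratively turn random noise into the target, DDPM-style."""
    rng = random.Random(seed)
    betas, alphas, alpha_bars = make_schedule(num_steps)
    # Start from pure Gaussian noise: the "pattern of random dots".
    x = [rng.gauss(0.0, 1.0) for _ in target]
    for t in reversed(range(num_steps)):
        x0_hat = oracle_denoiser(x, t, target)
        ab_t = alpha_bars[t]
        ab_prev = alpha_bars[t - 1] if t > 0 else 1.0
        # Mean of the DDPM posterior q(x_{t-1} | x_t, x0_hat).
        coef_x0 = math.sqrt(ab_prev) * betas[t] / (1.0 - ab_t)
        coef_xt = math.sqrt(alphas[t]) * (1.0 - ab_prev) / (1.0 - ab_t)
        mean = [coef_x0 * a + coef_xt * b for a, b in zip(x0_hat, x)]
        if t > 0:
            # Add a little fresh noise on every step except the last.
            std = math.sqrt(betas[t] * (1.0 - ab_prev) / (1.0 - ab_t))
            x = [m + std * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean
    return x


if __name__ == "__main__":
    tiny_image = [0.0, 0.5, 1.0, 0.25]  # four grayscale "pixels"
    print(sample(tiny_image))
```

Because the oracle is perfect, `sample` ends up returning the target values (up to floating-point error) no matter which random dots it starts from. With a real, imperfect network, each step instead nudges the noise toward an image that the model considers consistent with the text description.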

As with other OpenAI models, DALL-E 2 has not yet been released for public use. However, AI researchers can register online to request access. As usual, OpenAI plans to make an interface (API) available later so that third-party developers can build software on top of it.

Also check out Google's Imagen, a neural network that likewise generates images from text descriptions. Compared to DALL-E 2, Imagen is still at an early stage, but according to Google, the images it generates have higher quality and better “image-text alignment” than those of competing models.