Microsoft has released Orca 2, a pair of small language models with 7 billion and 13 billion parameters. Despite their modest size, the models match or outperform counterparts up to ten times larger on a range of reasoning benchmarks.

Orca 2 builds on the original 13B Orca model, which demonstrated strong reasoning abilities, and represents a step forward in AI efficiency. Microsoft researchers focused on giving these smaller models the kind of reasoning capabilities traditionally seen only in much larger ones. The result is a pair of models that are compact yet effective at complex reasoning tasks.

To underscore its commitment to the broader AI community, Microsoft has open-sourced both Orca 2 models. This decision is expected to fuel further research into developing smaller, efficient models that can perform on par with larger ones. It’s a step towards making advanced AI more accessible, especially for enterprises with limited resources.

Orca 2’s development addressed a critical gap in the AI landscape: the lack of advanced reasoning abilities in smaller models. By fine-tuning the Llama 2 base models on a highly tailored synthetic dataset, Microsoft Research has enabled these smaller models to employ various reasoning techniques effectively. This approach differs from traditional imitation learning, in which a small model simply copies the outputs of a larger one, because it lets the models adopt different strategies for different tasks.

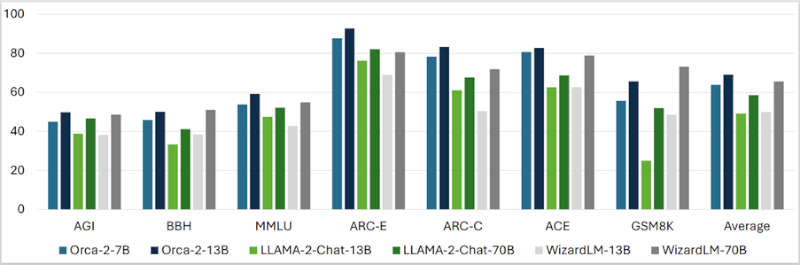

When tested across 15 diverse benchmarks, the Orca 2 models largely matched or outperformed much larger models. They surpassed Llama-2-Chat-13B and 70B, as well as WizardLM-13B and 70B, in areas such as language understanding, common-sense reasoning, and multi-step reasoning. The only exception was GSM8K, a benchmark of grade-school math word problems, where WizardLM-70B performed better.

The release of Orca 2 opens the door for more small, high-performing models to emerge in the AI space. This trend is already visible in recent releases such as the 34-billion-parameter model from China’s 01.AI and the 7-billion-parameter model from Mistral AI, both of which have shown impressive capabilities.