DeepSeek has captured the attention of artificial intelligence enthusiasts worldwide. The new AI model, developed in China, has positioned itself as a formidable competitor, even challenging industry leaders like OpenAI and Google. Seizing the moment, Chinese tech giant Alibaba has introduced its latest AI model, Qwen2.5-Max.

Alibaba announced the launch of Qwen2.5-Max, a Mixture of Experts (MoE) model pretrained on more than 20 trillion tokens. The company claims that the model outperforms DeepSeek V3 on multiple benchmarks, particularly those testing general knowledge and problem-solving. Alibaba also said the model was post-trained using Supervised Fine-Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF).

Alibaba has been developing AI models for some time, but none of them has generated as much buzz as DeepSeek. In response, the company took to its official X account to reaffirm its place in the AI race and emphasize its ability to build competitive, cutting-edge models.

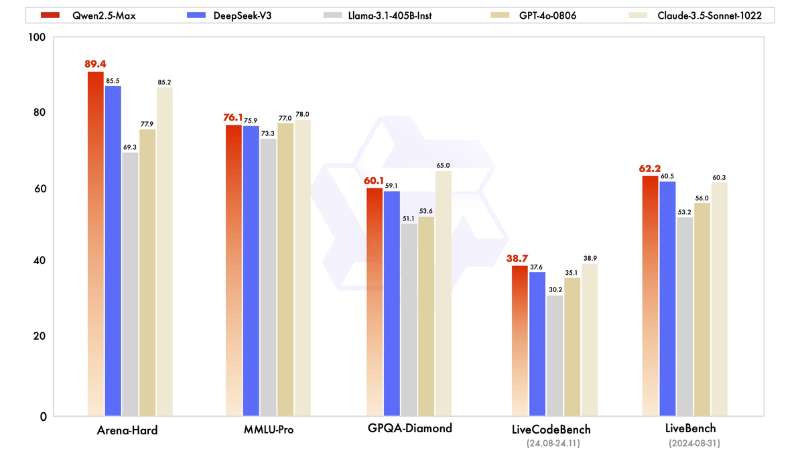

“The burst of DeepSeek V3 has attracted attention from the whole AI community to large-scale MoE models. Concurrently, we have been building Qwen2.5-Max, a large MoE LLM pretrained on massive data and post-trained with curated SFT and RLHF recipes. It achieves competitive performance against the top-tier models, and outcompetes DeepSeek V3 in benchmarks like Arena Hard, LiveBench, LiveCodeBench, GPQA-Diamond.”

Alibaba’s Qwen2.5-Max Aims to Outperform DeepSeek

Alibaba is confident that its latest AI model, Qwen2.5-Max, has what it takes to outshine competitors like DeepSeek V3. According to the company, Qwen2.5-Max is designed for a wide range of applications and performs well on complex knowledge-based tasks, decision-making, and even code generation. Benchmarked against industry leaders such as DeepSeek V3, GPT-4o, and Claude-3.5-Sonnet, the model holds its ground, delivering strong results on demanding tests like Arena-Hard, LiveBench, LiveCodeBench, and GPQA-Diamond.

The creators of Qwen2.5-Max assert that their model surpasses leading open-weight models, including DeepSeek V3, Llama-3.1-405B, and its own predecessor, Qwen2.5-72B. To back these claims, Alibaba put the model through a series of challenging evaluations, including college-level academic tests, decision-making scenarios, programming assessments, and general usability benchmarks. According to the company, the results position Qwen2.5-Max as a top-tier AI model capable of meeting diverse user needs.

For those eager to explore its capabilities, Qwen2.5-Max is now available to the public through Qwen Chat, a chatbot interface similar to ChatGPT and DeepSeek. The platform supports web search and can also generate images, videos, and artifacts. One standout feature is its ability to write JavaScript code that produces interactive visuals with simulated physical properties; for example, a function that spawns emojis wherever the user clicks on the screen.

How Qwen2.5-Max Stacks Up Against DeepSeek

Much like DeepSeek, Qwen2.5-Max leverages a machine learning technique known as Mixture of Experts (MoE) to enhance the efficiency and performance of large language models (LLMs). Instead of relying on a single neural network to process all inputs, MoE divides the model into multiple specialized subnetworks, each functioning as an “expert” in handling specific types of data.

These experts are coordinated by a routing network (the router) that decides which subnetworks should process each piece of input, typically each token. For instance, one expert might become adept at handling programming code while another handles conversational text; in practice, such specializations are learned during training rather than assigned by hand. Once the selected experts have processed the input, their outputs are combined using weights produced by the router, yielding a single output that the rest of the model builds on.
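To make the routing step concrete, here is a minimal sketch of a generic top-k gated MoE layer for a single token vector, written in plain Python. It is a textbook-style variant, not Qwen2.5-Max's actual implementation, whose router details the article does not describe. It assumes a linear router, a softmax over only the selected experts' scores, and toy experts that merely scale their input; the names `moe_forward` and `make_expert`, and the `top_k=2` setting, are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def moe_forward(
    x: Vector,
    router_weights: List[Vector],
    experts: List[Expert],
    top_k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Send one token vector x through its top_k experts (hypothetical toy layer).

    Returns the combined output and the indices of the experts that ran.
    """
    # 1. Router: a linear layer giving one score (logit) per expert.
    logits = [dot(w, x) for w in router_weights]
    # 2. Keep only the top_k highest-scoring experts; the others are skipped.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # 3. Turn the chosen experts' scores into mixing weights that sum to 1.
    gates = softmax([logits[i] for i in chosen])
    # 4. Weighted sum of the selected experts' outputs.
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen


def make_expert(scale: float) -> Expert:
    """Toy expert that just scales its input (real experts are feed-forward networks)."""
    return lambda v: [scale * t for t in v]


experts = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
router = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
y, chosen = moe_forward([2.0, 1.0], router, experts, top_k=2)
print(chosen, y)  # experts 0 and 1 run; experts 2 and 3 are never called
```

With the input `[2.0, 1.0]`, the router scores are `[2, 1, -2, -1]`, so only experts 0 and 1 are evaluated, mixed with weights of roughly 0.73 and 0.27. Skipping the low-scoring experts entirely, rather than running them and giving them small weights, is what makes the layer sparse.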

According to Alibaba, this approach makes it possible to build larger models that remain efficient and adaptable. Because only a few experts are activated for each input, an MoE model does far less computation per token than a dense model with the same total number of parameters, which significantly reduces computational costs. Additionally, by engaging different experts for different inputs, the model can handle a wide variety of data types and tasks. This adaptability makes Qwen2.5-Max a versatile tool for diverse user needs.
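The cost argument can be checked with simple arithmetic. The sketch below counts total versus per-token active parameters for a hypothetical MoE configuration. The numbers (16 experts, 2 active per token, sizes in billions of parameters) and the `MoEConfig` class are invented for illustration and are not Qwen2.5-Max's actual configuration, which the article does not give. It also assumes, as a rough rule, that per-token compute scales with the number of active parameters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MoEConfig:
    """Hypothetical MoE sizes, in billions of parameters (not Qwen2.5-Max's real figures)."""
    num_experts: int        # experts available to the router
    top_k: int              # experts activated per token
    params_per_expert: int  # parameters in one expert (summed over all MoE layers)
    shared_params: int      # attention, embeddings, etc., used by every token

    def total_params(self) -> int:
        # Every expert must be stored, even if it rarely runs.
        return self.shared_params + self.num_experts * self.params_per_expert

    def active_params(self) -> int:
        # Only the top_k routed experts run for a given token.
        return self.shared_params + self.top_k * self.params_per_expert

    def active_fraction(self) -> float:
        return self.active_params() / self.total_params()


cfg = MoEConfig(num_experts=16, top_k=2, params_per_expert=10, shared_params=20)
print(cfg.total_params(), cfg.active_params(), round(cfg.active_fraction(), 3))
# 180 40 0.222
```

In this made-up example the model stores 180 billion parameters but uses only 40 billion per token, about 22% of the compute a dense 180-billion-parameter model would need under the stated assumption. Memory requirements, by contrast, still follow the total count, since every expert has to be loaded.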

Another selling point is accessibility. Like DeepSeek, and unlike some of its competitors, Alibaba's new model is available free of charge. Users simply sign in with a Google or GitHub account to access it. Moreover, unlike services such as OpenAI's ChatGPT, where premium models often come at an additional cost, Qwen Chat lets users select Qwen2.5-Max as their default model and use its advanced features without paying extra.