Google DeepMind has developed RT-2, which it describes as a first-of-its-kind vision-language-action model that lets robots be controlled with simple language commands. Rather than relying solely on specialized robotics training, RT-2 also learns from web data, with the aim of creating versatile robots that can navigate diverse real-world environments.

Robots typically need meticulous training on billions of data points to function. But RT-2 builds on large vision-language models trained on online text and images to acquire common-sense knowledge. For example, RT-2 can recognize and dispose of trash without explicit training, having learned what trash is and how it’s handled from web data.

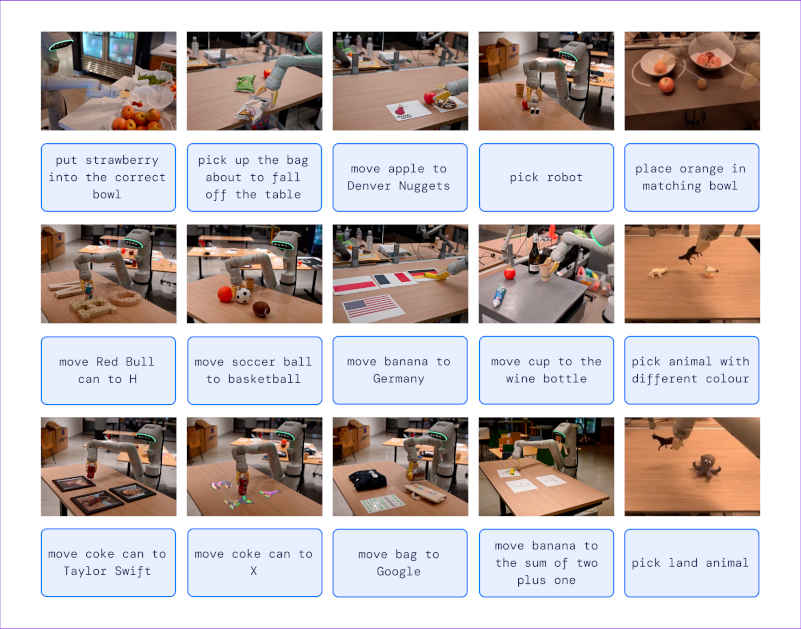

In one test, engineers asked RT-2 to “pick up an extinct animal.” Without task-specific training, RT-2 identified a dinosaur toy among figurines on a table, demonstrating an ability to adapt that exceeds that of conventional robots.

RT-2 builds on Google’s Transformer architecture, which excels at generalizing information. It takes Transformer models pre-trained on web data and fine-tunes them on additional robotics data. The resulting model processes camera images and directly predicts appropriate robot actions.

Notably, RT-2 represents actions as tokens, the word-fragment units that language models operate on. This allows new robot skills to be taught with the same training techniques used on web data.
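To make the idea of actions as tokens concrete, here is a minimal Python sketch of one way a continuous robot action could be turned into a short string of integer tokens and back. It is an illustration under stated assumptions, not Google's implementation: the bin count `NUM_BINS`, the value range of -1 to 1, and the function names `encode_action`, `decode_action`, and `to_text` are all hypothetical choices made for this example.

```python
from typing import List, Sequence

NUM_BINS = 256  # assumed number of discrete bins per action dimension


def encode_action(action: Sequence[float], low: float = -1.0, high: float = 1.0) -> List[int]:
    """Map each continuous action value to an integer bin index (a token)."""
    tokens = []
    for value in action:
        clipped = min(max(value, low), high)       # keep the value inside the range
        fraction = (clipped - low) / (high - low)  # rescale to [0, 1]
        tokens.append(min(int(fraction * NUM_BINS), NUM_BINS - 1))
    return tokens


def decode_action(tokens: Sequence[int], low: float = -1.0, high: float = 1.0) -> List[float]:
    """Map bin indices back to the center value of each bin."""
    width = (high - low) / NUM_BINS
    return [low + (token + 0.5) * width for token in tokens]


def to_text(tokens: Sequence[int]) -> str:
    """Render the tokens as a plain string, the form a language model emits."""
    return " ".join(str(token) for token in tokens)


if __name__ == "__main__":
    # Hypothetical action: small arm displacement plus a gripper command.
    action = [0.10, -0.25, 0.00, 1.00]
    tokens = encode_action(action)
    print(to_text(tokens))
    print(decode_action(tokens))
```

Because the action ends up as ordinary text, a model that already produces text can produce it too, and the decoding step turns that text back into approximate motor commands. The price is a small rounding error, bounded by half a bin width in each dimension.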

The model can also reason through multi-step plans, like choosing an alternate tool or drink based on a situation. In over 6,000 trials, RT-2 matched RT-1, an earlier specialized robotics model, on known tasks and nearly doubled RT-1's performance on new ones.

While RT-2 shows promise for versatile robot control through language, Google says more work is needed before real-world deployment. But the natural language approach could someday yield capable general-purpose robots that interpret information and tasks much like humans.

RT-2 does still need human oversight and has limitations in physical manipulation. But it may enable robots to carry out useful new tasks not easily programmed through traditional methods.